Regardless of whether your program has integrated artificial intelligence into learning, your students have access to generative AI tools, which many already use. For faculty and administrators, the rapid evolution of these tools may evoke excitement about the possibilities for the technology to enhance learning. At the same time, this innovation prompts concerns about academic integrity and critical thinking. Both these responses are valid, and both point to the importance of an open dialogue with students about the place of AI in their education.

While many educators sense how urgent these conversations have become, they are uncertain how to approach them. If you or your faculty members are in this position, explore these strategies for talking about AI use with students.

Why the AI talk needs to happen

The AI talk should happen sooner rather than later, even if the picture of AI’s place in your program is still coming into focus. There are three reasons for this urgency:

- Education: With the right use cases, AI tools can create a more efficient, personalized, career-ready learning experience. On the other hand, poor applications can hinder students from developing important skills like critical thinking and problem-solving. Even if your faculty members are still ironing out use cases for your program, they can be transparent about this, teaching students by modeling their own learning process in exploring new technologies.

- Ethics: Some students may already be using AI tools as part of their learning experience, whether faculty members approve of their use or not. While there’s room to refine a code of ethics over time, these students need to hear a strong message about academic integrity, why it matters, and where the principled guardrails for AI use in learning stand.

- Equity: Factors like socioeconomic status can influence a student’s access to AI tools, and some will be better acquainted with generative AI than others. Your institution can promote educational equity by teaching all students about AI and providing controlled access to these tools to everyone enrolled in its programs.

10 tips for discussing AI with your students

Once your faculty members are on the same page about the need to discuss AI use in academics, you can implement a strategy for how to talk about AI with students. Keep these 10 tips in mind for a productive conversation on student AI use.

1. Educate yourself

Administrators and faculty should experiment with and learn about AI tools firsthand to bring an informed perspective to the conversation. That said, there’s no pressure to become an expert. It’s sufficient to know the main tools available, some principles for generating helpful prompts, how other institutions have incorporated AI into academics, and some background information about how AI models are trained. Modeling responsible curiosity in learning about AI can be valuable, so emphasize to students that faculty members can offer guidance and explain institutional policies, but are also on the learning journey alongside them.

2. Lead with questions through surveys

Approach the AI talk with empathy and open-mindedness. Some students may find AI intimidating and confusing. Others are eager adopters, but anxious about the possibility of disciplinary action if they think your institution opposes all generative AI use. To meet students where they are, distribute anonymous surveys with questions like:

- What do you know about how AI large language models work?

- Have you tried using AI in your personal life?

- Have you used AI to help with your studies or academic work?

- What AI tools have you used?

- Where have you found AI useful?

- What shortcomings do you think it has?

- How do you think AI could be helpful to your learning?

- How could it be harmful?

- Would you like to learn more about using AI?

Student responses to these questions can help you understand the experience and questions students already have. These insights can help structure the conversation and highlight the resources students need to navigate AI. Consider using student survey software for increased response rates and data visualization to get the most from these questionnaires.

3. Acknowledge the tools

Whether faculty approve of a given tool or not, it’s best to assume students know about or will discover it. This makes it crucial to acknowledge current and emerging AI tools they may encounter, while pointing out the strengths, weaknesses, and risks of each. Students are more likely to avoid a tool your institution disapproves of if they know about it, understand why they should steer clear of it, and can access approved alternatives.

4. Explain how students can use AI

Depending on a program’s learning outcomes, faculty and students can discuss various use cases for AI to enhance the learning experience. When students understand these use cases and how they relate to generative AI’s strengths and limitations, they are more likely to avoid improper uses. AI use cases to consider for your program include:

- Research summaries: An AI tool can become a student’s research assistant, summarizing study methodologies and conclusions before the student conducts their own in-depth analysis of the relevant sources.

- Brainstorming and outlining: AI tools can help students come up with assignment topics within their module’s parameters. They can also help generate research questions and project outlines.

- Practice questions: Students can prompt AI to generate practice test questions based on their course materials and learning outcomes.

- Grading and feedback: Lecturers can streamline their workload by having AI grade multiple-choice, true or false, fill-in-the-blank, and short answer questions. Students can gain additional feedback on their work by asking AI to evaluate it according to the course rubric, though they should cross-check AI feedback with their lecturer or tutor where necessary.

- Adaptive learning: AI algorithms can adapt personalized learning pathways for students in real time based on their performance. For example, the algorithm can assign additional practice questions in weaker areas or allow students who demonstrate exceptional understanding to move ahead to more challenging sections.

- Smart tutoring: AI chatbots can provide 24-hour support for student questions. If your program uses smart tutoring, advise students on which types of questions they can entrust to these chatbots and which they should take directly to their lecturer.

- Language services: AI translation tools can provide quick translations with a high and improving degree of accuracy. This function is especially useful for international students, provided they can also access human language services for more nuanced translation needs.

- Predictive analytics: AI-powered predictive analytics can help busy lecturers and support staff triage student needs and intervene when students are at risk of disengaging.
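For readers who want to see the adaptive learning idea from the list above in concrete terms, here is a minimal sketch of the rule it describes: assign extra practice in weaker areas and let strong performers move ahead. It is an illustration only, not how any particular product works. The thresholds (0.6 and 0.9), the names `TopicResult`, `next_step`, and `plan`, and the sample topics are all hypothetical.

```python
from dataclasses import dataclass

# Hypothetical thresholds chosen for illustration only.
REVIEW_BELOW = 0.6   # scores under this trigger extra practice
ADVANCE_FROM = 0.9   # scores at or above this unlock harder material


@dataclass
class TopicResult:
    topic: str
    correct: int
    attempted: int

    @property
    def score(self) -> float:
        # Treat a topic with no attempts as a score of 0.0.
        return self.correct / self.attempted if self.attempted else 0.0


def next_step(result: TopicResult) -> str:
    """Return a simple recommendation for one topic."""
    if result.score < REVIEW_BELOW:
        return "extra practice"
    if result.score >= ADVANCE_FROM:
        return "move ahead"
    return "continue"


def plan(results: list[TopicResult]) -> dict[str, str]:
    """Map each topic to its recommended next step."""
    return {r.topic: next_step(r) for r in results}


if __name__ == "__main__":
    results = [
        TopicResult("fractions", correct=4, attempted=10),
        TopicResult("decimals", correct=7, attempted=10),
        TopicResult("percentages", correct=10, attempted=10),
    ]
    for topic, step in plan(results).items():
        print(f"{topic}: {step}")
```

Real adaptive learning systems typically weigh far more signals than a single score per topic, so treat this as a conversation starter when explaining the concept to students rather than a design to adopt.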

5. Inform about the risks

Help students recognize improper AI use cases, as well as the shortcomings AI tools have even with appropriate use. A sober perspective on AI should acknowledge:

- Biases and blind spots: Students should understand the basics of AI model training so they can appreciate the biases and information gaps that creep in from human labor and source material.

- Hallucinations and inaccuracies: Show that AI models make mistakes, so users must apply critical thinking and research skills to verify the content they generate. Hallucinations are one type of mistake where AI models generate false, misleading, or invented content. This can happen when a model tries to overcome a gap in its source data by generalizing or getting too creative. For example, an AI model asked to list journal articles on a certain topic may fabricate nonexistent articles.

- Environmental implications: Institutions should acknowledge AI’s growing energy demands. AI-related energy consumption is projected to grow by around 50 percent each year up to 2030. The data centers AI models rely on already consume about 4.4 percent of all U.S. electricity. Making AI more sustainable is becoming an important research area.

- Plagiarism: AI use must fall within the context of academic originality and integrity. This means students should express ideas in their own words or quote appropriate sources and acknowledge where they’ve found data through rigorous citations. When students learn new information from an AI tool, they should verify it using authoritative sources and cite those sources in their work. Remind students of your institution’s policies regarding plagiarism and originality, and discuss how AI relates to these policies.

- Privacy and security: Some of the most popular generative AI tools claim the right to harvest and share user data while offering limited transparency regarding the parties who gain access to this data, how they use it, and whether they protect it. Institutions should establish firm standards for cybersecurity and explore which tools meet them before approving student and faculty use.

- Improper use obstructing skill development: While teaching students about AI’s benefits for learning, help them understand how improper use could hold their learning back. For example, students who use generative AI answers uncritically in assignments miss opportunities to develop the creative problem-solving skills that employers value.

6. Provide practical exposure to academic AI use

Give students opportunities to learn about AI through guided experimentation. One type of experiment involves asking students to critique AI responses to in-class tasks, similar to constructive peer-to-peer feedback. For example, a math class could ask AI to solve a problem and show its work. Students would then need to check whether the steps are sound and whether the conclusion is accurate. If not, the teacher can ask students to explain where the tool went wrong. This is also an opportunity to discuss how large language models work and what causes their computational shortcomings.

An English class could apply the same principle by, for example, asking AI to write sonnets in the styles of Shakespeare and Petrarch. Students could then discuss whether AI followed the conventions of these sonnets and captured the voice of each writer well, challenging themselves to improve on the AI-generated sonnets.

7. Invite engagement

Leverage multiple, diverse perspectives to define AI’s place in a program. This can be a dynamic, ongoing process of stakeholder engagement, including input from faculty members, students, and industry partners. One way to do this is through a collaborative, outcome-oriented approach to learning.

For example, let students know the intended learning outcomes for an upcoming assignment. One such outcome could be developing their research skills for finding and analyzing sources. The lecturer could distribute a questionnaire or facilitate a class discussion about how AI tools could work for or against this outcome. Some students may propose using AI as a search tool to create a list of potential sources. This would be an opportunity for the lecturer to discuss the risk of AI hallucinations, and how this use case may not work for the learning outcome.

Other students might suggest finding their own sources and then asking an AI tool to summarize the research methodologies and group similar studies for comparison. This may be a more viable use case. Either way, this kind of engagement promotes creative and critical thinking about AI’s potential uses.

8. Share an AI ethics code

Technological innovation and ongoing stakeholder engagement mean any detailed code of ethics for AI use must be a living document. That said, defining ethical boundaries is vital for guiding students in responsible AI use and holding them accountable. An AI ethics code should address:

- The institution’s overall attitude toward AI.

- The tools the institution considers secure.

- Acceptable use cases.

- Wrongful uses.

- AI detection protocols.

- The disciplinary penalties and procedures if faculty detect wrongful use.

- Program-specific use cases and boundaries.

9. Incorporate AI into programs

Once your institution has a preliminary code for AI ethics, best practices, and disciplinary procedures, gradual integration can begin. This could start with responsible AI workshops and the incorporation of AI into in-class exercises.

10. Facilitate reflection

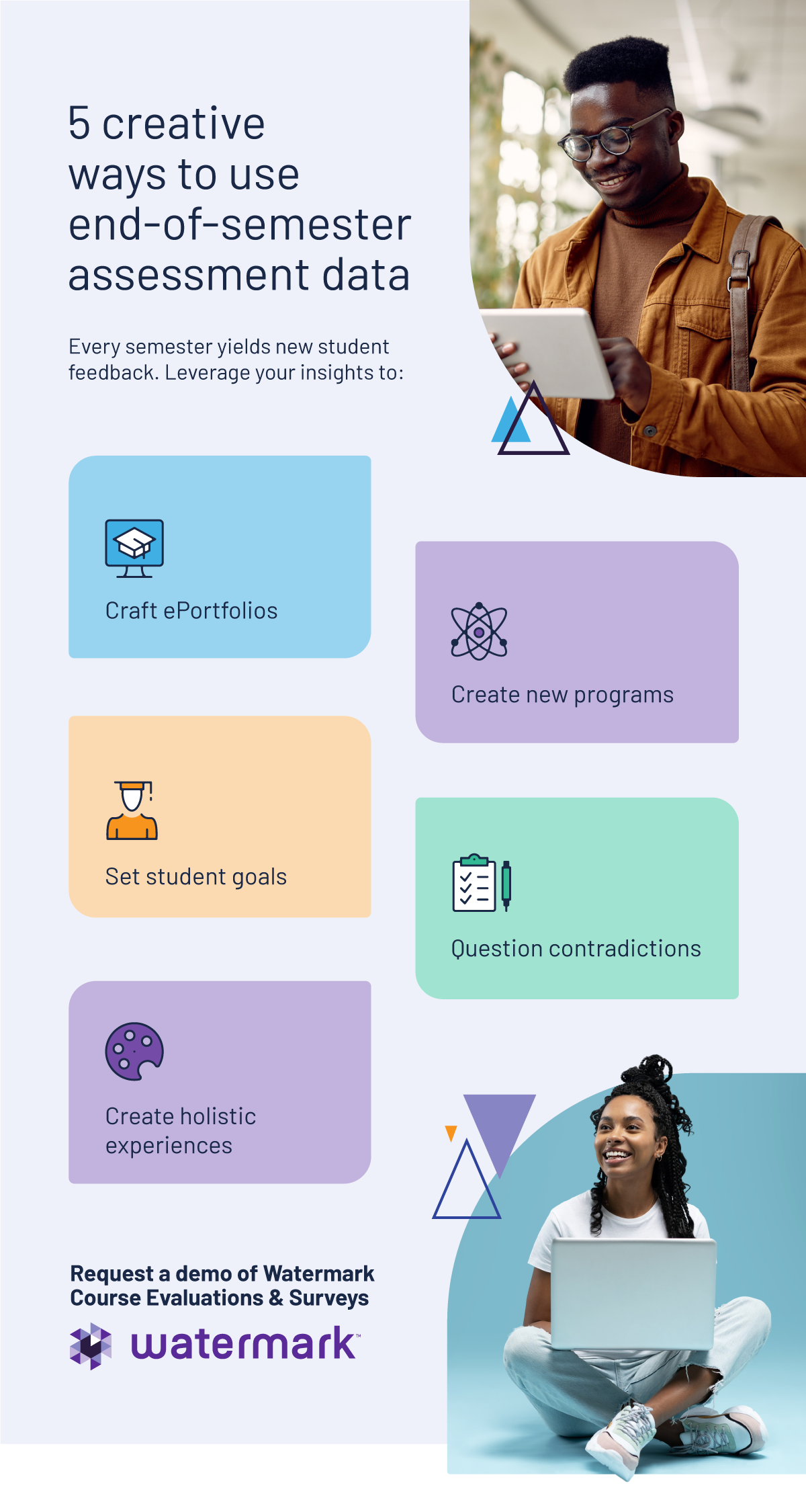

As AI integration proceeds, invite lecturers and students to share their reflections through surveys and midterm course evaluations. Engaging with diverse perspectives on AI integration can help each program adapt to meet student needs. This may mean introducing new use cases, creating helpful resources, or updating disciplinary policies.

Support student success in the age of AI with Watermark

Navigating the AI conversation with students requires balancing openness to technological innovations with an abiding commitment to student success. As your institution considers how to integrate AI into learning, the Watermark Educational Impact Suite (EIS) can provide a solid software foundation.

EIS is a centralized system of software solutions for higher education. It integrates with your preferred learning management system (LMS) and other Watermark products to provide valuable insights for assessment, accreditation, curriculum management, student success, surveys, and more.

As you explore what your students know about AI, try using Watermark Course Evaluations & Surveys within the EIS to make questionnaires accessible and improve response rates by 70 percent or more. If you want to model positive AI use, lean on Watermark Student Success & Engagement’s AI-powered predictive analytics to support the students who need help most. These are just two of the tools the Watermark EIS offers you for harnessing data-driven insights as you consider AI’s potential place in your programs.

Request a free demo of the Watermark EIS to explore how it can support your institution’s success.