Universities dedicate significant time, talent, and resources to the work of assessment. Most institutions consider assessment an important self-monitoring practice to ensure they are delivering on their mission and promises to students; accreditors also require them to assess student learning. But, as David Eubanks, assistant vice president for institutional effectiveness at Furman University and a former board member of the Association for the Assessment of Learning in Higher Education (AALHE), noted, “The difficulty in using assessment results to improve academic programs is a recurring theme at assessment conferences.” It’s also a common theme in the higher education press.

Eubanks believes assessment results are difficult to translate into meaningful improvement because, currently, “the methods of gathering and analyzing data are very poor.” Here, we’ll consider the challenges of gathering and analyzing data for assessment, and envision a path toward assessment practices strengthened by a culture of data sharing and by technology that breaks down data silos to broaden the perspective on learning outcomes.

Why is “closing the loop” so hard?

In the past 20 years, several organizations and a vast body of literature have sprung up to establish and share assessment best practices. But in the seminal article “Closing the Assessment Loop,” Trudy W. Banta, professor of higher education and senior advisor to the chancellor for academic planning and evaluation at Indiana University-Purdue University Indianapolis, and Charles Blaich, director of the Center of Inquiry in the Liberal Arts at Wabash College, raised an important question: “With so much good advice available, why are improvements in student learning resulting from assessment the exception rather than the rule?”

Several factors make it difficult to successfully close the assessment loop:

- The labor of capturing and analyzing data

- The difficulty of ascribing meaning to learning outcomes without contextualizing data, which may reveal root causes or solutions that lie outside curriculum or teaching practices

- The challenge of connecting data to generate insights, and of using technology to support the culture and processes that translate those insights into action

Capturing and analyzing assessment data

Linda Townsend, director of assessment at Longwood University and board member of the Virginia Assessment Group, described the assessment challenges Longwood faculty and staff faced over a number of years. Methods for collecting data varied across programs and depended on the skill set of whoever led a program’s assessment process. The workload, from contacting other faculty to obtain student data to manually compiling that data in spreadsheets or word-processing tables for analysis and discussion with faculty, made assessment an onerous task. “Through one-on-one discussions with program coordinators, it became clear to me that we needed some way to collect assessment data and get it in their hands so that they don’t spend hours and hours trying to pull it all together themselves,” Townsend said. She also found that when people left, their files were sometimes lost.

When using technology to manage assessment data, user-friendly software is vital, according to Tara Rose, director of assessment at Louisiana State University and a member of the AALHE board of directors. “Since faculty do not use the software daily, they have to relearn the same system every year and that’s frustrating,” Rose said. This can sour perceptions of both the system and assessment as a process. “I want to change that mindset,” Rose said. “Users should have the experience you have when you go into a Google product where you just know what to do. It should be easy. The system should also be customizable and flexible to support their specified assessment processes.”

Intuitive software is critical for supporting faculty in assessment planning and closing the loop, as is minimizing the number of places faculty have to go to collect and analyze the various types of data used in assessment. Before adopting an assessment solution for Longwood, “We actually held focus groups with administration, faculty, and staff to obtain input on what they wanted. What kept coming up over and over is an integrated platform allowing for multiple methods of data collection,” Townsend said. “They wanted a system that was more than just for assessment reporting: the whole gamut of data in one place so it’s accessible for continuous planning, assessment and reporting for the institution and accreditation. They don’t want to have to go to multiple places to pull this all together.”

Assessment should also tell stakeholders something they couldn’t have found out any other way, with some assurance that the information is accurate. “Assessment data isn’t research-level, peer-reviewed data, but you have to have some level of confidence in your data,” said Patricia Thatcher, associate vice president of academic affairs at Misericordia University. “And your data has to be easily available in a useful form.” One program at Misericordia already had a sense that its students weren’t doing well synthesizing ideas, but analyzing student data turned a gut perception into actionable information. “When they saw data that says 65 percent of their majors aren’t doing very well with synthesizing ideas, they set out to address that,” Thatcher said.

Escaping the data (and culture) silo to gain context

An additional challenge comes with considering data beyond that captured in student assessments. “A challenge remaining in assessment is that data is still very much siloed; offices are siloed too,” Rose said. “Institutions live in silos, as do the institutional effectiveness offices, institutional research offices, and teaching and learning offices. It has a lot to do with culture, and that’s one of the biggest challenges that institutions still face.”

Experts like Vince Kellen, CIO at the University of California, San Diego, agree. “Data must be freed from silos and transcend traditional hierarchies, making data hoarders a thing of the past,” Kellen wrote. “In this new world, information is no longer power. Information sharing is power. … information management is a team sport. People don’t own data; rather, they steward it.” Kellen, who also serves on UCSD’s Chancellor’s Cabinet and as CFO of the UCSD senior management team, recommends that institutions work to establish a new culture to achieve this data democratization.

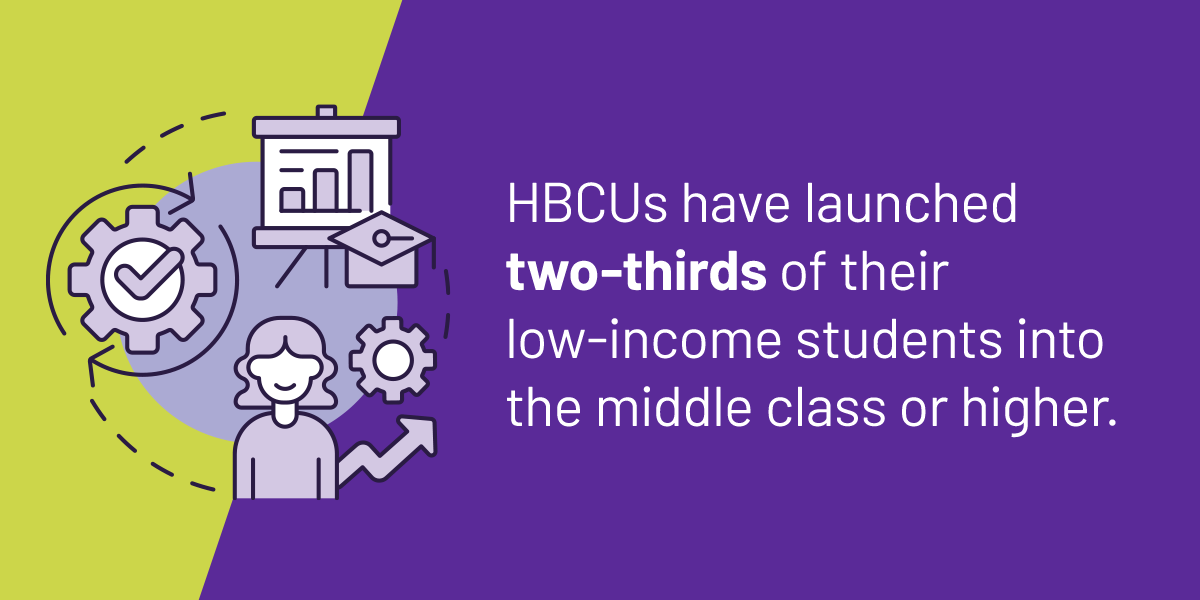

“Institutions have offices that do many different things. Are we sharing that information across offices, units, and programs so faculty can actually use the information to make decisions? Assessment is the process of systematically pulling together information, evaluating it, and making change,” Rose said. “It’s not just about student learning outcomes assessment. Assessment is about assessing program effectiveness, general education, student retention and graduation rates, student career data, and institutional effectiveness. It’s making sure that programs are aligning to the institution’s mission.”

Without data from all the siloed offices across campus, programs, faculty, and administrators find it difficult to make the connections necessary to gain insight and make change. “If a program is assessing student learning but they’re not looking at, for example, alumni data or post-graduation success career data, etc., how can they make information-based decisions about their program?” Rose said.

Breaking down the data silos created by the point solutions that individual offices or colleges use is critical to taking the friction out of assessment and other processes of inquiry. “We can work on our communication processes as an institution, but we need to have a set of tools that everybody, or a broad swath of stakeholders on campus have access to so everyone can see, well, if I take on this initiative, it links beautifully with our institutional strategic plan,” Thatcher said. “Being able to know that without an exchange of email that takes days or weeks is really important because we’re all doing so many different things. Data integration sets up the possibility of continuous improvement in instruction and student learning.”

Moving toward “meaningful, manageable, and sustainable” assessment

Rose clarifies the term “meaningful assessment” with a question: Can faculty and other stakeholders actually make change and continuously improve based on the information that they’re getting back?

“We don’t want faculty to just go in and collect data year after year and say no improvements needed,” Rose said. “We want faculty engaged in the assessment process. Engagement can move faculty forward in thinking about what improvement means for their students and for their programs.”

In the current climate of skepticism toward the value of higher education, it’s critical for institutions to be able to demonstrate learning outcomes and meaningful improvement based on what’s learned through assessment. “We’re in higher education at a really stressful time,” Thatcher noted. “Integrated technology is a great way for institutions to become more self-aware, which will help inform improvement and innovation. If you don’t have data, you really can’t innovate in a way that will improve your institution’s viability or quality.”

Education technology providers have begun consolidating point solutions into unified systems. These systems must be flexible enough that different programs and offices can get the insights they need. “I always express to faculty that I want them to consider three things in terms of the assessment process. It has to be meaningful, manageable, and sustainable. They are the discipline experts,” Rose said. “As assessment practitioners, we need to let them figure out what works best for them in their programs. We need technology to work for every program, for every college. It should not be one-size-fits-all.”

Eubanks recognizes the power of assessment that leverages campus data for greater insight. “There is more data available than ever before, computation is cheap, and new methods for visualization and analysis abound,” Eubanks noted. “We can imagine a future where assessment leaders work closely with institutional researchers and scholars to create and share large sets of high-quality data. Assessment conferences could then be about what we discovered and how faculty are using that information.”

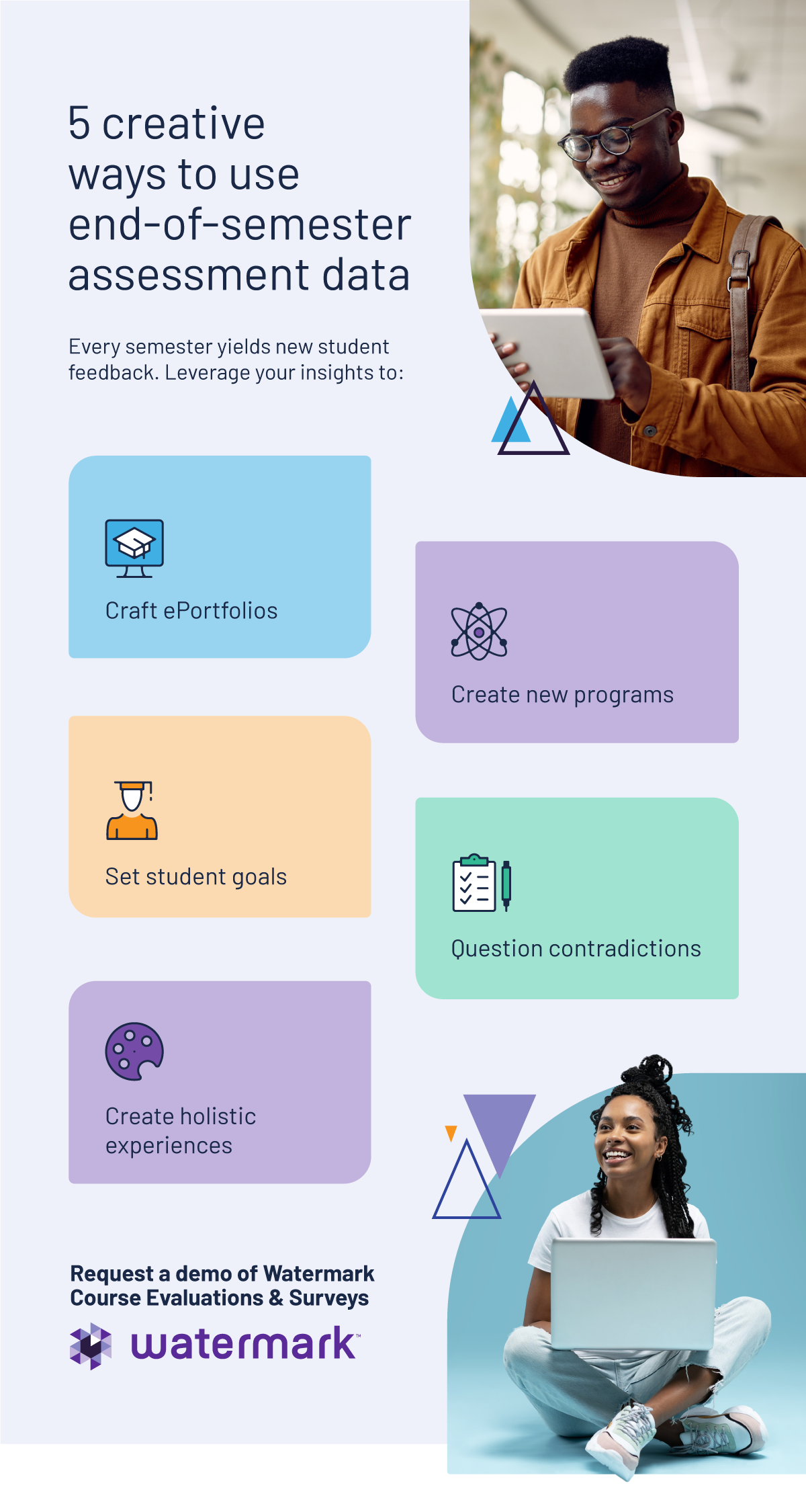

To support Eubanks’ vision for assessment, emerging assessment solutions should be:

- Intuitive and aligned with existing campus structure and processes

- Flexible to support various types of assessment and stages of assessment maturity, yet structured enough to guide users through established best practices

- Comprehensive enough that users don’t have to log into multiple systems to access different types of assessment data, including student learning outcomes and program effectiveness data

- Integrated with other campus systems, including the student information system (SIS) and learning management system (LMS), so that assessment findings can be informed by other institutional effectiveness data, such as student retention

Colleges and universities need a software suite capable of simultaneously driving critical action in leadership, student success, and continuous improvement. Our Educational Impact Suite (EIS) eases the process of capturing student artifacts, facilitates assessment in the context of other relevant campus data, and guides participants through an institution’s established processes. We want to help you spend your time on what really matters: truly understanding student and institutional outcomes to chart a course toward meaningful improvement.