Colleges and universities help students expand their horizons, achieve new heights, and prepare for their careers. To maximize the positive impact on a student body, higher education institutions can implement continuous improvement through various worthwhile initiatives that encourage ongoing growth and positive transformation.

Inspiring lasting cultural change within your institution takes commitment from the community. We are breaking down numerous ways to instill the values of continuous improvement into your college or university to inspire long-term progress and advancement.

What is continuous improvement?

Continuous improvement refers to the ongoing process of making gradual tweaks and advancements within an organization to achieve better performance, operational efficiency, and overall quality.

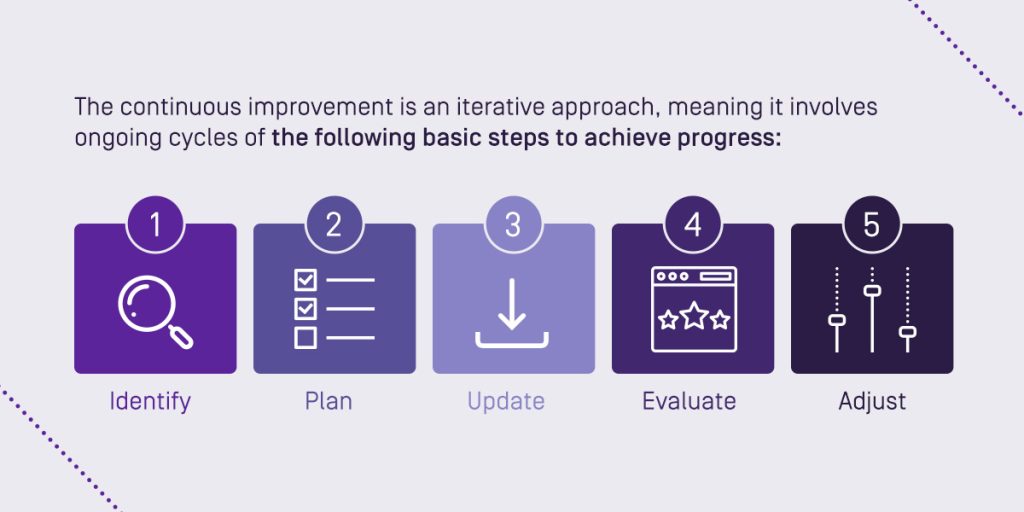

Continuous improvement is an iterative approach, meaning it involves ongoing cycles of the following basic steps to achieve progress:

- Identify: Consider the areas within your operation that could use updating and refining to benefit your students and staff.

- Plan: Work with your faculty and community to collaborate on a plan of action that moves the institution closer to its goals.

- Update: Implement the changes and advancements into your processes.

- Evaluate: Assess the updates you’ve made and determine if they could benefit from further tweaks.

- Adjust: Refine your updates and make changes to promote further advancement.

Continuous improvement can become woven into the culture of the organization, inspiring years of positive growth and ultimately creating a higher education institution that delivers outstanding student outcomes.

The concept of continuous improvement is rooted in the idea that there is always an opportunity for betterment. People often compare it with concepts such as Kaizen, a Japanese principle of improving all functions at every level of an operation to achieve optimal efficiency and quality.

Various industries, sectors, and businesses, including higher education institutions, can capitalize on continuous improvement principles to supercharge their missions.

Principles of continuous improvement

In addition to taking an iterative approach, the key principles of continuous improvement include:

- Involvement at every level: Continuous improvement is ultimately a team effort that requires all individuals to actively participate in discussions and engage in efforts to benefit the organization as a whole. Every individual contributes to success with their own skills, experience, insights, and perspectives.

- Data-driven decision-making: Another important aspect of this process is using data and measurable outcomes to determine the effectiveness of your organization’s improvement efforts. These insights help teams decide which tweaks to make to your operations next.

- Minimizing waste: By taking steps to refine and enhance your systems, processes, and resources, your organization can effectively reduce waste in the form of inefficiencies, losses, or fruitless activities and programs.

- Inspiring long-term growth: Creating a continuous improvement culture within your organization helps guide your operations toward lasting growth and success.

Continuous improvement can help businesses and organizations reap many benefits. Higher education institutions can use these principles to maximize student and faculty experiences to create the most advantageous academic environment possible.

The importance of building a culture of continuous improvement

Continuous improvement in higher ed is highly valuable, helping institutions stay ahead of the curve and remain competitive. The following are key benefits of actively improving your processes:

Enhance student experiences and learning outcomes

The most advantageous and important aspect of creating a culture of continuous improvement and ongoing learning within your higher education institution is improving student experiences.

Students are the lifeblood of every college and university. Prioritizing their learning outcomes through small upgrades, changes, and tweaks to your processes demonstrates your institution’s commitment to their success.

Refining everything from curriculums to assessments to technology can supercharge your institution and deliver the best possible experiences for learners.

Boost collaboration and positive transformation

A huge advantage of instilling continuous improvement into the culture of your higher education institution is bringing your community together. Collaborating and getting input from the individuals in every facet of your organization allows for better camaraderie and teamwork, making the whole community feel valued.

With more people contributing to your institution’s improvement mission, achieving positive transformation and innovation is easier.

Increase productivity and efficiency

Taking steps to continuously improve your processes can boost productivity and efficiency across your entire institution.

By making your operations more effective, you can save time, money, and energy to redirect toward efforts that help both faculty and students succeed.

How to create a culture of continuous improvement in higher education

Explore the many ways your college or university can instill continuous improvement into its culture for long-term success:

1. Work with your administrators

Your institution’s administrators have an incredibly important responsibility to foster, manage, and organize many functions across your campus. Involving them in the brainstorming and planning processes for continuous improvement efforts is key to getting the full scope of your institution’s overall performance. They will play an integral role in identifying the areas that could use improvement and getting the resources to make these changes.

Be sure to invite these figures into your plans for continuous improvement and encourage their active participation in these efforts to maximize your results. Clearly communicate how your institution will directly benefit from making persistent modifications and upgrades to your inefficient and ineffective processes. Describe the short-term and long-term goals that you can reach with the support of your administrators, and don’t be afraid to ask for their help.

2. Encourage collaboration and participation at every level

Your administrators are not the only individuals who should be involved in your higher education institution’s continuous improvement efforts. Every member of your faculty and staff, from professors and department heads to teaching assistants and janitorial staff, contributes to the success of your initiatives. The more people you have on your team, the sooner you will reach your objectives and start seeing real progress on your campus.

Encourage and promote collaboration with every person who invests time in your university or college, whether digitally or in person. The student body’s input is equally important when it comes to making positive changes. Invite them to help your mission to improve your institution at every level by asking for feedback and engaging in conversations about opportunities for growth.

Creating specific spaces, like hosting meetings or creating a digital forum for discussion and collaboration, can be highly beneficial for getting everyone on board with your plans for continuous improvement.

3. Establish a leadership committee

Harnessing a culture of ongoing learning and betterment takes time and commitment. One of the best ways to ensure your institution’s efforts leave a lasting impact is by creating a leadership committee dedicated to spearheading and assessing the effectiveness of your continuous improvement initiatives.

The committee can take the lead in facilitating open communication between students, faculty, administrators, and stakeholders, keeping your institution’s community up to date on the latest progress. If someone has an idea for a new improvement plan, they can turn to the leadership committee for guidance on the necessary next steps.

Having a team devoted to these efforts will help your college or university build on its growth year after year, keeping the momentum going and driving long-term advancement. These individuals will also advocate for important endeavors designed to benefit the institution.

4. Share your institution’s vision for the future

The primary goal of continuous improvement on college campuses is creating a better future. Your higher education institution should have a clear vision of how to achieve these objectives.

Collaborate with your community to determine where your college or university should focus its efforts. You’ll want to choose initiatives that will maximize the benefits for your faculty and student learning outcomes.

Once you have a sense of your college or university’s vision for the future that aligns with its key values and missions, you can use that vision to bring your community together. Emphasize the benefits of striving towards excellence and innovation both as a whole and individually. Highlight the power of teamwork and having a clear vision in mind when working toward new and exciting goals.

5. Motivate your faculty and staff

To get the most out of your college or university’s continuous improvement initiatives, you have to motivate your teams. Your institution’s faculty and staff are mission-critical. Consider implementing incentives to boost participation and inspire more members of your community to roll up their sleeves and get to work.

Some helpful tips for motivating your employees include:

- Recognition: Publicly highlight individuals who make a huge difference in your efforts to create more efficient processes or better curriculums, such as in your monthly newsletter or on your institution’s website. Celebrate their wins and encourage them to keep up the hard work. Recognition is one of the best ways to boost worker engagement and productivity.

- Involvement: Another great method for motivating your faculty is getting them involved in your continuous improvement efforts as much as possible. Invite them to meetings, ask for their input, and share regular updates about your institution’s progress. Keeping them in the loop will help them feel valued and more invested in the work.

- Rewards: Rewarding hardworking employees is a great way to incentivize their participation in your improvement plans. Consider giving prizes like monetary bonuses, additional paid time off, or promotions to the team members who create the biggest impact to keep the ball rolling.

6. Provide professional development opportunities

Another useful way to instill a culture of continuous improvement in your higher education institution is to provide plenty of growth opportunities for your faculty. When your staff constantly learns, grows, and sharpens their skills, your college or university directly benefits. Encourage your team to attend events like professional workshops, seminars, and conferences. If your budget allows, consider covering the costs for them to remove financial hurdles that may stop them from attending on their own.

Implement new training programs to help the team master new skills and connect with other educators. By providing these types of professional development opportunities, your institution can demonstrate its commitment to excellence and ongoing learning. Plus, your college or university can enjoy additional benefits, including higher faculty retention rates and attracting top talent.

7. Share the successes and the growth opportunities

In addition to recognizing individuals for their hard work and commitment to your continuous improvement goals, celebrate the successes of your college or university as a community. Spotlight the changes that have positively impacted your institution, whether the update is minor or massive. Public acknowledgment of positive improvements can inspire everyone to work a little harder and achieve these goals together.

With that said, don’t be afraid to share the initiatives that are not working. When striving toward continuous improvement, you may fail from time to time in making a system or curriculum better. Instead of sweeping those failures under the rug, discuss them openly with your community. Ask for feedback on how to do better, and use that input to inform your next steps toward a viable solution.

8. Monitor and track progress

As your institution embarks on the journey of continuous improvement, monitoring your progress along the way is key. Finding measurable performance indicators can be a great way to assess the effectiveness of your initiatives and whether or not they require more attention.

Tracking your progress allows you to gauge your success, collaborate on the best action plan to get better, and adjust strategies as you go. A software solution that captures key performance data is an invaluable tool for colleges and universities looking to supercharge their missions. Your leadership committee should regularly review and assess your data to ensure your plans are working as intended.
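For teams that want to see what a regular data review might look like in practice, here is a minimal sketch in Python. It is only an illustration under stated assumptions: the `Indicator` class, the `review` function, the indicator names, the targets, and every figure are hypothetical and do not come from any particular institution or software product. The sketch flags an indicator for attention when its latest reading falls below its target or has declined since the previous review.

```python
from dataclasses import dataclass, field
from typing import List, Optional


@dataclass
class Indicator:
    """A hypothetical performance indicator tracked across review cycles."""
    name: str
    target: float
    readings: List[float] = field(default_factory=list)

    def record(self, value: float) -> None:
        """Store the reading collected for the latest review cycle."""
        self.readings.append(value)

    def change(self) -> Optional[float]:
        """Difference between the two most recent readings, if both exist."""
        if len(self.readings) < 2:
            return None
        return self.readings[-1] - self.readings[-2]

    def needs_attention(self) -> bool:
        """True when the latest reading is below target or has declined."""
        if not self.readings:
            return False
        delta = self.change()
        below_target = self.readings[-1] < self.target
        declined = delta is not None and delta < 0
        return below_target or declined


def review(indicators: List[Indicator]) -> List[str]:
    """Return the names of indicators a committee may want to discuss."""
    return [ind.name for ind in indicators if ind.needs_attention()]


# Illustrative, made-up figures for three hypothetical indicators.
retention = Indicator("first_year_retention_rate", target=0.85)
retention.record(0.80)
retention.record(0.83)  # improving, but still below target

satisfaction = Indicator("student_satisfaction_score", target=4.0)
satisfaction.record(4.2)
satisfaction.record(4.3)  # above target and improving

completion = Indicator("course_completion_rate", target=0.90)
completion.record(0.95)
completion.record(0.92)  # above target, but declining

flagged = review([retention, satisfaction, completion])
print(flagged)
```

The rule used here (below target or declining) is just one possible choice. Your leadership committee may prefer different thresholds or a longer trend window depending on the goals it has set.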

9. Ask for ongoing feedback

To optimize your institution’s continuous improvement efforts and create a culture of ongoing learning, getting feedback is a must. Encourage your faculty, administrators, and stakeholders to reflect on the changes they experience on campus. Ask them to share their input and opinions on making your initiatives more effective.

Empower your students to share their thoughts as well. Send out surveys, invite them to meetings, and demonstrate how their insights will inform the next phases of improvement. The more feedback your institution receives, the more information you have to use when making important decisions about your college or university’s future.

10. Promote innovation and experimentation

Innovation is a driving force for continuous improvement, so creating an environment where your students and staff feel comfortable taking calculated risks is highly beneficial.

Doing is sometimes the best way to learn, and thoughtful experimentation can offer incredible insights that your teaching staff and students may never have stumbled across otherwise. Encourage your professors to explore new technologies and try different teaching methods.

Consider how your higher education institution can support its community as they take new risks and aim for the stars.

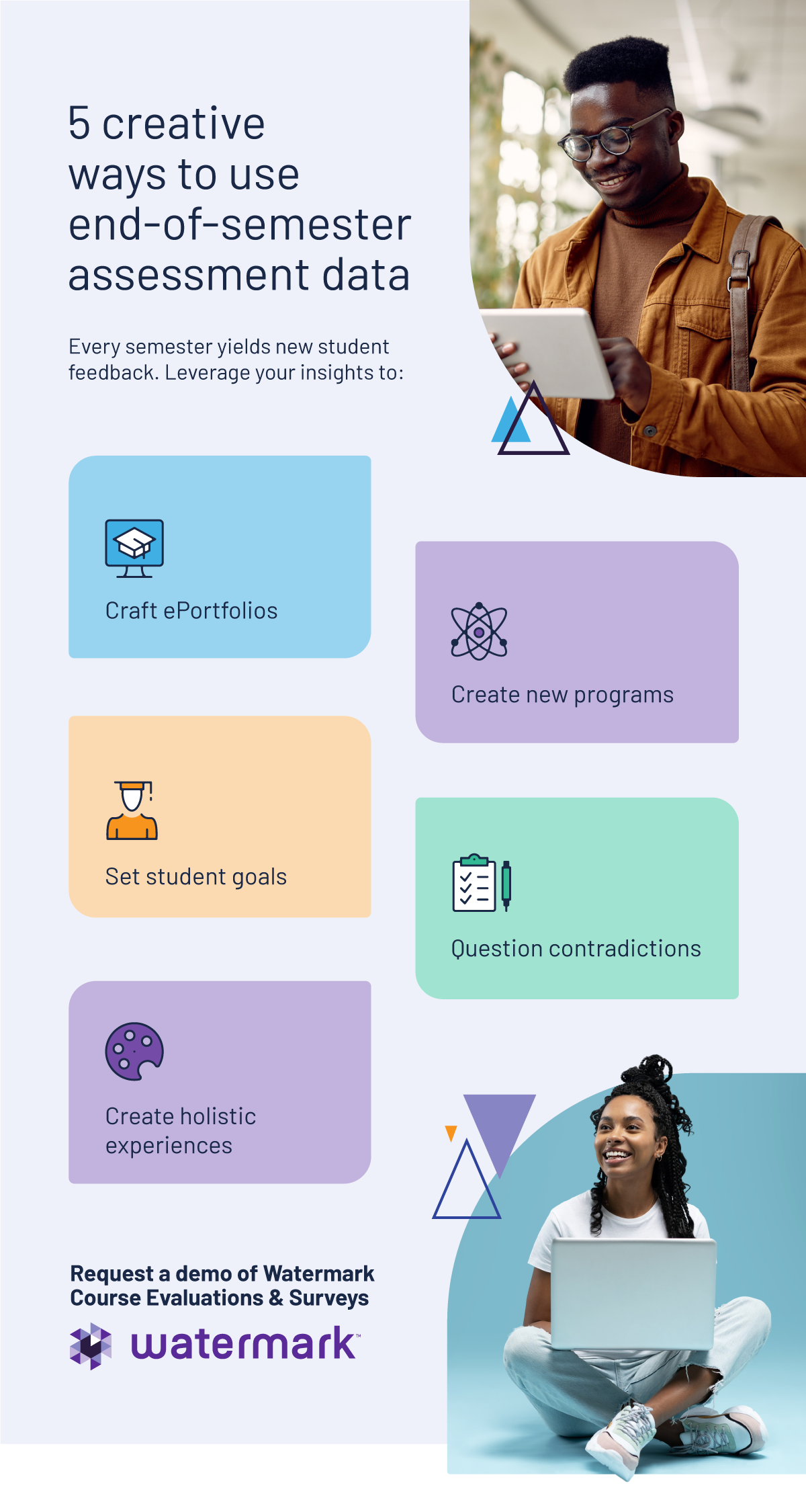

Support continuous improvement at your higher education institution with solutions from Watermark

If your college or university is looking to foster continuous improvement, turn to Watermark. Our higher education software solutions help streamline your daily operations by simplifying data collection and automating time-consuming activities, giving your faculty more time to focus on student outcomes.

With Watermark, you get access to the data you need to inform your institution’s decision-making and drive continuous improvement at every level.

Are you interested in learning more about strategy planning and accreditation software for higher education from Watermark? Contact us or schedule a demo of our solutions today.