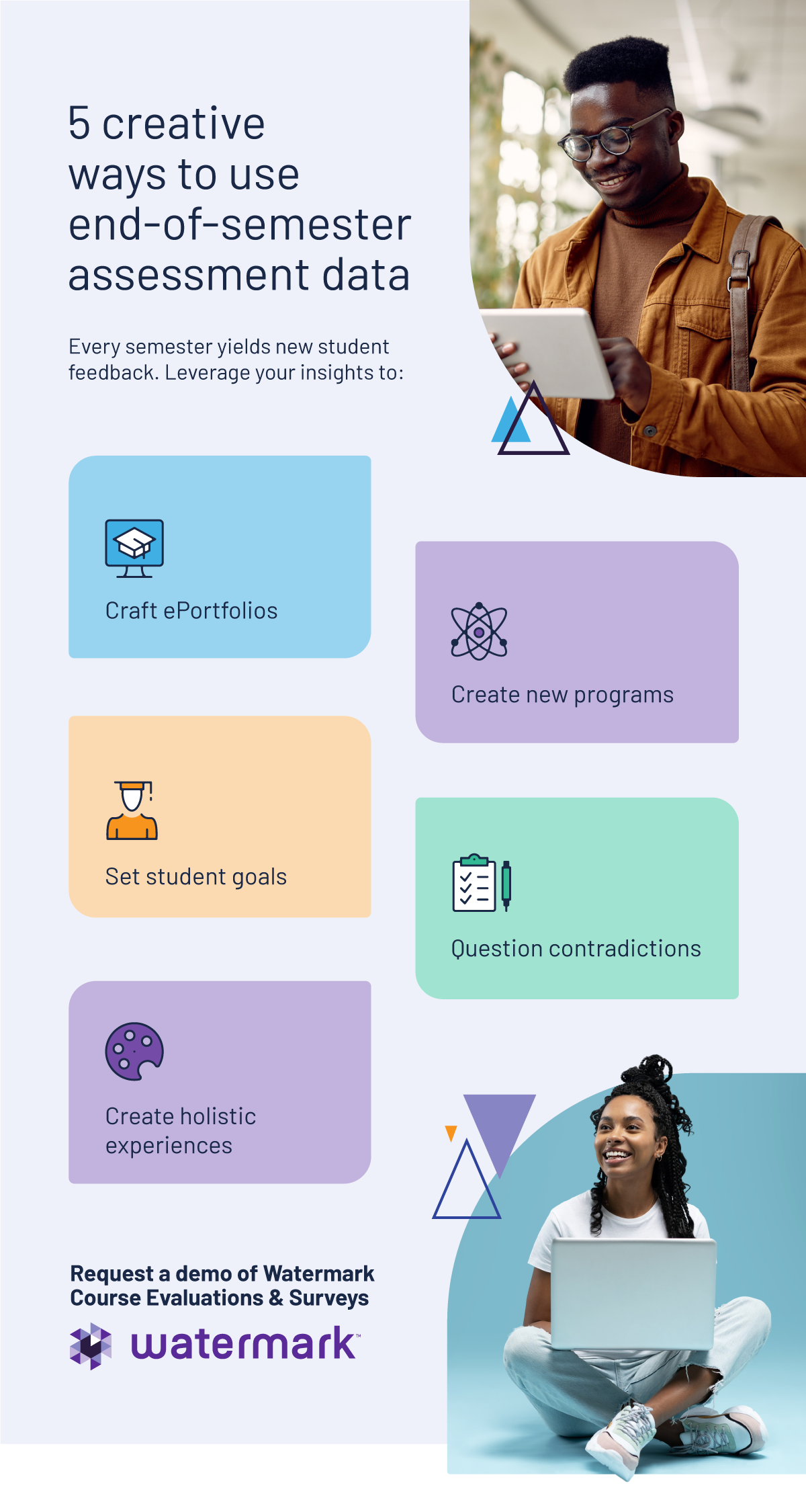

At the end of each term, you dutifully distribute course evaluations and collect feedback from students, striving for 100% response rates. Now that you’ve gathered all this data, are you taking the right steps to review and analyze it?

Your campus may pull information from evaluations for specific scenarios — faculty annual reviews, departmental planning sessions, accreditation reporting — but there are greater opportunities to use a course evaluation report as part of your data-driven decision-making process.

Reporting vs. Analysis

First, it’s important to understand the difference between reporting and analysis. Reporting is the process of gathering information and feedback (data points) about what is happening on campus. Analysis requires using the data to generate insights and answer strategic questions.

Reporting and analysis are both essential steps in the data-driven decision-making process, but they’re only truly valuable if they drive action. That means building a course evaluation report that gathers the specific information you need to uncover opportunities for improvement, analyzing that data with a critical eye, and then creating an action plan to apply what you learned and make adjustments.

Course Evaluations: What’s Your Goal?

When it comes to course evaluations, there are many ways to build reports that slice and dice the feedback you gather from students each term, including data on instructor performance, course resources and structure, and the overall learning experience.

Standard reports from Course Evaluations & Surveys (formerly EvaluationKIT) let you review results by course, but you can also aggregate data to more closely examine different areas of the institution, run batch reports to pull multiple and combined reports for offline discussion, and pull detailed feedback for administrators and instructors.

At the end of an evaluation period, faculty and administrators review how the last term went and apply these insights as they plan the next session. Standard course evaluation reports can be run for each course to review metrics specific to a term. These reports offer a static snapshot of the data that can be downloaded in PDF format.

Throughout the year, it’s also important to review evaluation data at the department, college, and university levels to monitor trends and make adjustments. The report builder offers more flexibility, letting users create customized reports that pull specific data for review. These reports can be copied and reused in future terms and across departments. By using the same evaluation structure every term, you build a body of data that lets you track trends over time. One valuable report in Course Evaluations & Surveys (formerly EvaluationKIT) is the Instructor Means report, which calculates the average scores for all instructors who report to a department, school, or other area.
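The arithmetic behind an instructor-means view is simple: group individual ratings by department and instructor, then average each group. The Python sketch below shows that idea. It is not how Course Evaluations & Surveys computes its report. The flat record layout, the field names (`department`, `instructor`, `score`), the 1 to 5 rating scale, and the sample rows are all hypothetical.

```python
from collections import defaultdict
from statistics import mean

# Hypothetical flat export: one row per student rating of an instructor.
# Field names and the 1-5 scale are assumptions for illustration only.
responses = [
    {"department": "Biology", "instructor": "Lee", "score": 4},
    {"department": "Biology", "instructor": "Lee", "score": 5},
    {"department": "Biology", "instructor": "Patel", "score": 3},
    {"department": "Biology", "instructor": "Patel", "score": 4},
    {"department": "History", "instructor": "Ortiz", "score": 5},
]


def instructor_means(rows):
    """Return {department: {instructor: mean score}} for the given rows."""
    # Collect every score under its department and instructor.
    scores = defaultdict(lambda: defaultdict(list))
    for row in rows:
        scores[row["department"]][row["instructor"]].append(row["score"])
    # Average each instructor's scores, rounded for display.
    return {
        dept: {name: round(mean(vals), 2) for name, vals in by_instr.items()}
        for dept, by_instr in scores.items()
    }


if __name__ == "__main__":
    for dept, by_instr in instructor_means(responses).items():
        for name, avg in sorted(by_instr.items()):
            print(f"{dept:10} {name:8} {avg:.2f}")
```

A real report also has to decide how to present instructors with very few responses, because a mean built from two ratings can shift sharply with a single new response.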

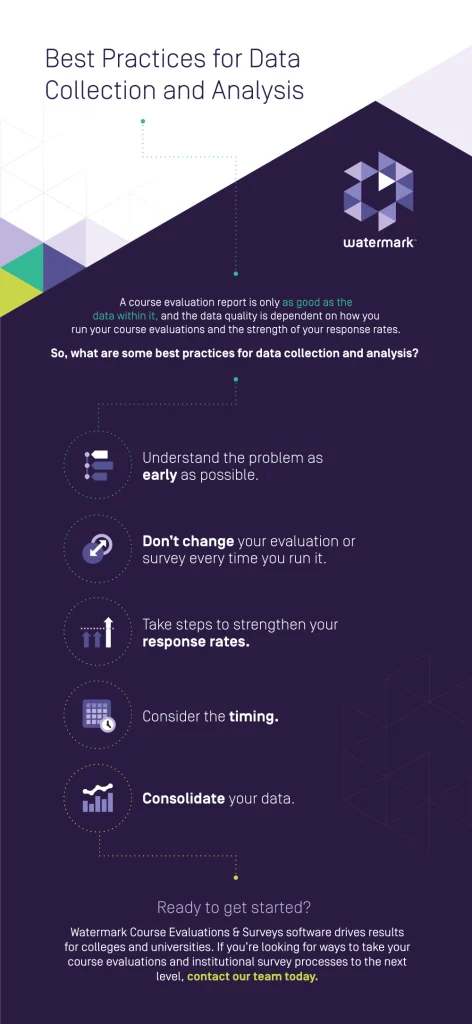

Best Practices for Data Collection and Analysis

A course evaluation report is only as good as the data within it, and data quality depends on how you run your course evaluations and how strong your response rates are.

Understand the Problem as Early as Possible

Every time you collect information, you should know how you plan to use it. Define your pain points early and understand the problem you’re facing so that you can better craft your solutions. This understanding will equip you to prepare meaningful assessments and evaluations.

Don’t Change Your Evaluation or Survey Every Time You Run It

Many faculty members review their results right after the evaluation period closes, digest the feedback, and implement changes the next time they teach the course. However, there is also value in building a longitudinal set of data over time. By keeping your course evaluation questions and structure consistent, you’re better able to spot trends and review shifts both for individual instructors and across the department as a whole.
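Consistent questions are what make term-over-term comparison possible. The sketch below assumes each term's results are available as per-question mean scores keyed by a stable question ID. This data structure, the IDs, and the values are hypothetical. Any question that was added, dropped, or renamed between terms has no counterpart, so it falls out of the comparison.

```python
# Hypothetical per-question mean scores for one course, keyed by a stable question ID.
fall = {"Q1_clarity": 4.1, "Q2_feedback": 3.6, "Q3_workload": 3.9}
spring = {"Q1_clarity": 4.3, "Q2_feedback": 3.4, "Q4_pacing": 4.0}


def term_over_term(previous, current):
    """Return (score changes for shared questions, IDs that cannot be compared)."""
    # Only questions asked in both terms can be compared directly.
    shared = previous.keys() & current.keys()
    changes = {q: round(current[q] - previous[q], 2) for q in sorted(shared)}
    # Questions present in only one term have no baseline to compare against.
    unmatched = sorted(previous.keys() ^ current.keys())
    return changes, unmatched


if __name__ == "__main__":
    changes, unmatched = term_over_term(fall, spring)
    print("changes:", changes)
    print("not comparable:", unmatched)
```

Each time a question is reworded or replaced, that item's history starts over. This is why keeping the instrument stable pays off over several terms.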

Take Steps to Strengthen Your Response Rates

There are many tactics that can help boost response rates, including integration with your learning management system (LMS), a thoughtful communication strategy, and a strategic evaluation structure. Check out our guide for more helpful tips to boost response rates.

Consider the Timing

When you launch digital course evaluations, think not only about how long to leave them open but also about when you start and stop collecting responses. Be strategic in developing your evaluation structure and question format, and eliminate points of friction for students. (For example, blocking students from taking their final exam in the LMS until their evaluation is complete adds friction and may lead to skewed results.)

Consolidate Your Data

Quickly finding and accessing meaningful data will aid in the analysis process. Having a clean and actionable data view will make it much easier for you to determine which information will be most beneficial, ensure you have every piece of data in one place for easy trend-tracking and regular review, and help you determine how to write a course evaluation report.

Real Results From Watermark

Watermark Course Evaluations & Surveys software drives results for colleges and universities. Our solution enables institutions to apply student feedback to the bigger campus picture. With our software, you can easily capture and analyze data to create action plans that drive change on campus.

The Watermark Course Evaluations & Surveys solution simplifies the evaluation process and enables faculty and staff to access relevant data. With automated distribution, customizable reports, and a repeatable evaluation process, you can boost student response rates and keep all relevant data in one convenient location. Other colleges and universities have already made the switch to Watermark and have seen impressive results.

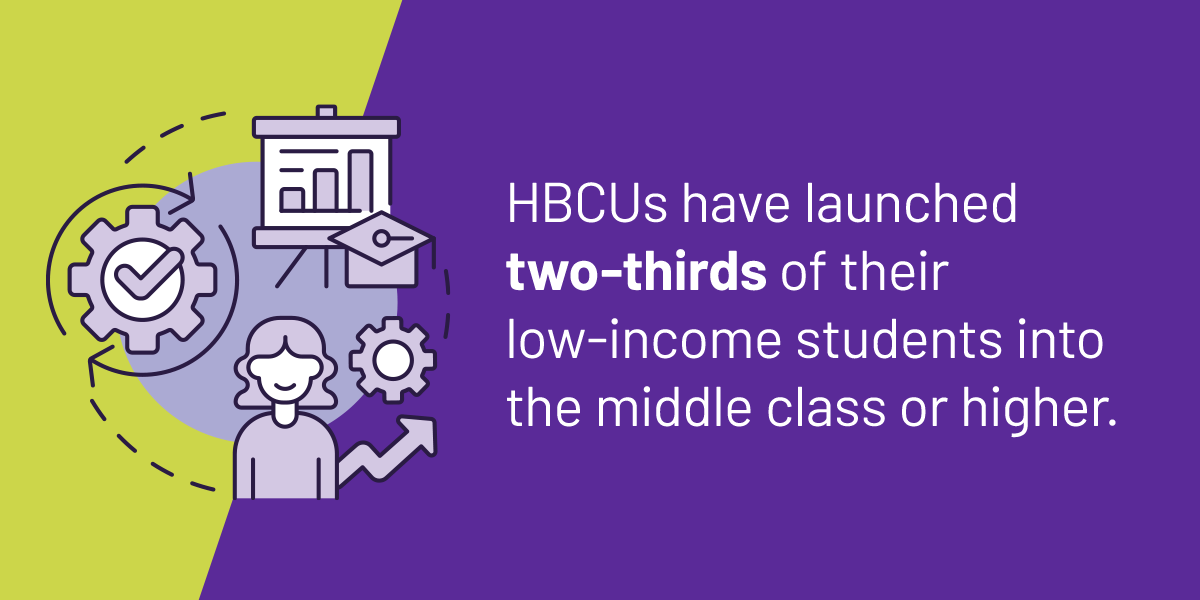

Higher education institutions have seen:

- Boosted response rates: Emory University saw a 78.7% response rate in course evaluations, leading to more than 23,000 evaluations for over 1,000 sections. Paperless course evaluations provided an “excellent” or “mostly good” experience for 92% of students.

- Effortless reporting: Roanoke-Chowan Community College batched reports seamlessly and compiled data to review trends. Faculty could more easily discover information for their department and better turn the data into action.

- An ability to save time: Embry-Riddle Aeronautical University stored hundreds of surveys that could be reused to add to or replicate existing projects, which saved the administrative team significant time.

- Better reporting: Baylor University was able to deliver timely feedback and provide deeper engagement with students. Using real-time data syncs, Baylor obtained more accurate data about student status in their courses.

Reporting Different Stats

When you choose Watermark Course Evaluations & Surveys, you can develop simpler and more effective course evaluations and pull a variety of reports for quick trend-tracking. For instance, when building a course evaluation report, you can compare information across cohorts and combine data from multiple sections of the same course to create a complete picture of an educational program. You can then review trends and note where pain points and strengths appear.
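When you combine sections, a little arithmetic matters. Suppose each section's results give only a mean score and a response count. Averaging the section means directly gives a small section the same weight as a large one. The sketch below assumes a hypothetical `SectionSummary` record that holds those two numbers. It weights each section mean by its response count, which reproduces the mean of all individual responses. This illustrates the general technique and does not describe how Watermark combines sections.

```python
from dataclasses import dataclass


@dataclass
class SectionSummary:
    """Hypothetical per-section summary: mean item score and number of responses."""
    section: str
    mean_score: float
    responses: int


def pooled_mean(sections):
    """Response-weighted mean across sections (equals the mean of all raw responses)."""
    total = sum(s.responses for s in sections)
    if total == 0:
        raise ValueError("no responses to combine")
    return sum(s.mean_score * s.responses for s in sections) / total


def unweighted_mean(sections):
    """Simple average of section means; gives small sections outsized weight."""
    return sum(s.mean_score for s in sections) / len(sections)


if __name__ == "__main__":
    sections = [
        SectionSummary("BIO 101-01", 4.6, 10),
        SectionSummary("BIO 101-02", 3.8, 90),
    ]
    print(f"pooled:     {pooled_mean(sections):.2f}")
    print(f"unweighted: {unweighted_mean(sections):.2f}")
```

With these sample numbers, the pooled mean is 3.88 and the unweighted mean is 4.20. The gap appears because the unweighted version lets the 10-response section count as much as the 90-response one.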

Additionally, you can compare results from multiple years, pull departmental reports that aggregate evaluations, and access aggregated dashboards from all areas of your institution. Email notifications and deep integration with your learning management system help boost response rates and ensure that you hear your students’ voices. Through an intuitive user experience, our software guides students to their evaluations until they’ve completed them.

It’s Time to Level Up With Watermark!

Watermark has crafted innovative higher education software solutions for more than two decades. People are at our center, but data drives us. We know meaningful information is a catalyst for change and want to help you seamlessly collect, review, and distribute data that can improve your campus and student experiences.

Watermark was built for higher education. We help colleges and universities make a difference and obtain data to drive their missions. With Watermark, you can create robust plans for continuous growth and improvement at your institution in a fraction of the typical time. Don’t leave valuable data sitting in your course evaluation system. If you’re looking for ways to take your course evaluations and institutional survey processes to the next level, contact our team. We’re here to help!